
Modern marketers know what A/B testing is and why they should build it into their campaigns. The problem is that most marketers still think in terms of winners and losers, even though with A/B testing, everyone can be a winner.

For you and your customers to win, however, you need a more dynamic approach. One campaign may look like the winner, but it isn’t the winner for everyone.

Yes, MOST of your customers may prefer it, but not ALL of them do. Optimove’s approach to A/B testing accounts for the group of customers who prefer what you may see as the losing variant; for them, it’s the winner.

Overlooking these customers is the flaw in how many marketers use A/B testing.

With Optimove, A/B testing lets you make sure each customer gets the option that works best for them, because every customer is unique. Read on to learn more. We’re giving away the answers here, so you don’t need to study too hard.

At Optimove, putting customers first shapes how we approach A/B testing. With this approach, you can consistently send each customer the campaign that is most relevant to them.

Optimove’s AI learns from your customers’ behavior in each campaign in real time and adjusts the campaign accordingly. This means marketers can test campaigns and creatives against each other while the AI continuously decides which version each customer receives, in order to maximize uplift.

A Self-Optimizing Campaign (SOC) is a type of A/B/n campaign in Optimove that consists of two or more competing actions plus a control group for comparison. An SOC learns from its results over time and improves the campaign automatically. It’s a great way to reduce the guesswork in choosing the right campaign or creative and to send the optimal message to each audience member.
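The article doesn’t describe the algorithm behind SOC, so the following is not Optimove’s implementation. It is a minimal Python sketch of one common way to build an A/B/n campaign that learns as it runs: Thompson sampling (a multi-armed bandit technique) applied separately to each customer segment, with a random holdout serving as the control group. The class `SegmentBandit`, the segment names, the 10% control share, and the simulated response rates are all hypothetical.

```python
import random
from collections import defaultdict


class SegmentBandit:
    """Hypothetical A/B/n allocator: Thompson sampling per customer segment,
    plus a fixed random holdout that acts as the control group."""

    def __init__(self, actions, control_share=0.1, seed=None):
        self.actions = list(actions)
        self.control_share = control_share  # hypothetical holdout fraction
        self.rng = random.Random(seed)
        # Beta(1, 1) prior per (segment, action), stored as [alpha, beta]
        self.stats = defaultdict(lambda: {a: [1, 1] for a in self.actions})

    def choose(self, segment):
        """Return 'control' or the action that wins a posterior draw for this segment."""
        if self.rng.random() < self.control_share:
            return "control"
        draws = {
            action: self.rng.betavariate(alpha, beta)
            for action, (alpha, beta) in self.stats[segment].items()
        }
        return max(draws, key=draws.get)

    def record(self, segment, action, responded):
        """Update the segment's posterior for the action the customer received."""
        if action == "control":
            return  # control customers serve only as the comparison baseline
        alpha_beta = self.stats[segment][action]
        if responded:
            alpha_beta[0] += 1  # success: increment alpha
        else:
            alpha_beta[1] += 1  # no response: increment beta


def simulate(rounds=20_000, seed=7):
    """Simulate two segments that each prefer a different offer (made-up rates)."""
    true_rates = {
        "segment_1": {"A": 0.12, "B": 0.05},  # illustrative numbers only
        "segment_2": {"A": 0.04, "B": 0.10},
    }
    bandit = SegmentBandit(["A", "B"], control_share=0.1, seed=seed)
    sim = random.Random(seed + 1)
    counts = {seg: {"A": 0, "B": 0, "control": 0} for seg in true_rates}
    for _ in range(rounds):
        seg = sim.choice(sorted(true_rates))
        action = bandit.choose(seg)
        counts[seg][action] += 1
        if action != "control":
            bandit.record(seg, action, sim.random() < true_rates[seg][action])
    return counts


counts = simulate()
for seg, c in counts.items():
    print(seg, c)
```

Because each segment keeps its own statistics, the two segments end up favoring different offers, which mirrors the point that the “losing” variant can still be the winner for part of the audience. A production system would also need richer customer features, delayed conversions, and a statistical comparison against the control group; none of that is modeled here.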

Get optimum results by implementing some of these best practices:

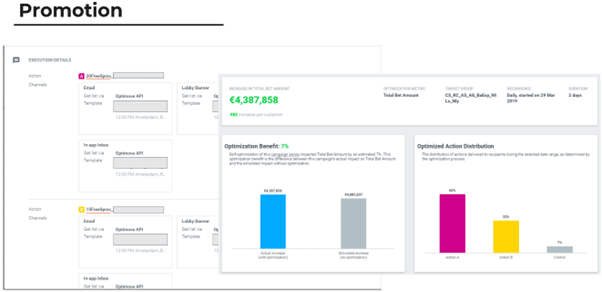

One of the most common elements to vary in an A/B test is the promotion in the template. For example, one Optimove client, a leading gaming operator, sent an email with two different offers: Group A received 20 free spins, while Group B received 15 free spins. Everything else in the template was identical.

You can see below that far more customers received template A (60%) than template B (33%) or the control group (7%), because Optimove’s AI determined how to distribute the offers and delivered to each recipient the one that matched their personal promotional preference.

The campaign is still running. 60% of users receive template A because it’s more relevant to them. These users share the same behavioral DNA, and the campaign adjusts automatically every day based on players’ behavior.

The best part? The operator has squeezed an extra 7% out of this campaign (to date!), generating an additional $300,000.

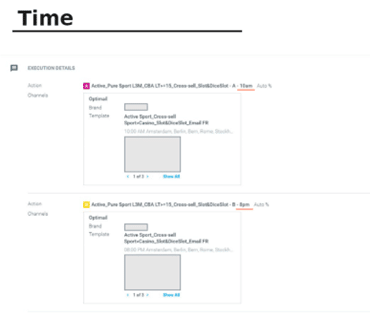

Another Optimove gaming operator sent the same template to customers at two different times. Templates A and B were identical; the only difference was that template A was sent at 10 AM and template B at 8 PM.

Again, the operator wasn’t searching for a single winning action; instead, it aimed to identify and deliver the communication each player prefers. As a result, the campaign generated an estimated 50% uplift in Total Bet Amounts.

By taking your players’ attributes into account throughout each campaign’s execution, you can reach each player at the time of day they prefer.

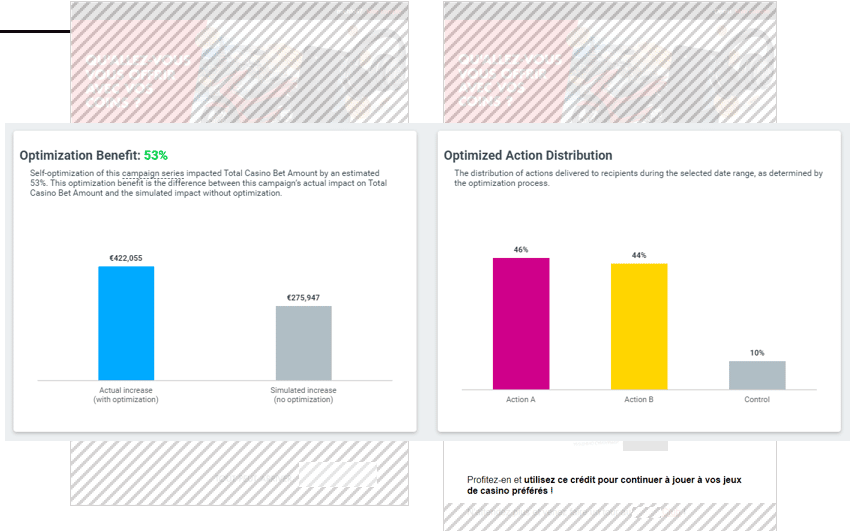

To find out which image works best in the template, a gaming operator changed only the banner at the top of its recurring email campaign series. Everything else was identical.

The operator saw an estimated 53% increase in Total Casino Bet Amounts. This uplift is the difference between the campaign’s actual impact on Total Casino Bet Amounts and the value the operator would have generated with a simple A/B test.
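The uplift definition above compares two values of the same metric. Since the result is reported as a percentage, the difference is presumably divided by the simple-A/B-test baseline; the article doesn’t state this explicitly, so treat it as an assumption. A minimal Python sketch of that arithmetic, with a hypothetical `uplift` helper and made-up numbers (not the operator’s data):

```python
def uplift(actual, baseline):
    """Return (absolute, relative) uplift of a campaign over a baseline.

    actual:   metric the adaptive campaign produced (e.g. total bet amount)
    baseline: estimated value of the same metric under a simple A/B test
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    absolute = actual - baseline
    return absolute, absolute / baseline  # relative uplift as a fraction


# Illustrative numbers only.
abs_gain, rel_gain = uplift(actual=115_000, baseline=100_000)
print(f"absolute: {abs_gain}, relative: {rel_gain:.0%}")
```

In practice the baseline is itself an estimate, since the operator never actually ran the simple A/B test, so the reported uplift inherits that estimate’s uncertainty.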

Pro tip: Try adding a second banner to the same template, one at the top and one at the bottom, to see whether the new template generates more clicks, higher response rates, and better overall engagement.

Remember, A/B testing is particularly valuable for recurring campaigns. Because these are automated campaigns that aren’t tied to a specific send date, they let you test a series of campaigns over time.

Now take a few of these industry best practices and apply them to your campaigns. We’re sure you’ll pass the test with an A+. Good luck!

Exclusive Forrester Report on AI in Marketing

In this proprietary Forrester report, learn how global marketers use AI and Positionless Marketing to streamline workflows and increase relevance.

Dafna is a content marketing manager and writer who generates branded content for online industries, specializing in lead generation, SEO, CRM, and lifecycle stage marketing.

With over ten years of professional writing experience, she helps brands grow and increase profitability, efficiency, and online presence. Dafna holds a B.A. in Persuasive Communications from Reichman University (IDC Herzliya).